It was always obvious that at the end of the election campaign there would be a slew of articles declaring that it had or hadn’t been “the internet election”. I decided back in March to start collecting examples of campaigning and media innovation around the election and putting them into a digital election timeline, so that when we got to polling day I’d have a timeline of events.

I chose to use Dipity as my tool. The free version allows you to create up to three timelines. Within a topic you give each event a title and a timestamp. Optionally you can add a description, image and link URL to each event. Dipity then builds a timeline using Flash, and the events can also be viewed as a plain chronological list, or in a ‘flip-book’ format. If you link to a video on YouTube, Dipity automatically embeds the video in the timeline.

To get the data to go into the timeline I relied quite heavily on Twitter. I made sure that I subscribed to the Twitter streams of the major parties, and to election Twitter streams from broadcasters like Channel 4 and the BBC.

During the day, every time I saw a link on Twitter that I thought might lead to an interesting bit of the digital campaign I marked it as a favourite. At night, I would then spend 20 minutes looking back through the day’s favourites, taking screengrabs, and entering the details into Dipity. I also had help from various people within news organisations who began sending me messages about content and services they had launched.

The timeline has around 150 events in it now, and I’ve been continuing to update it in the aftermath of the indecisive result.

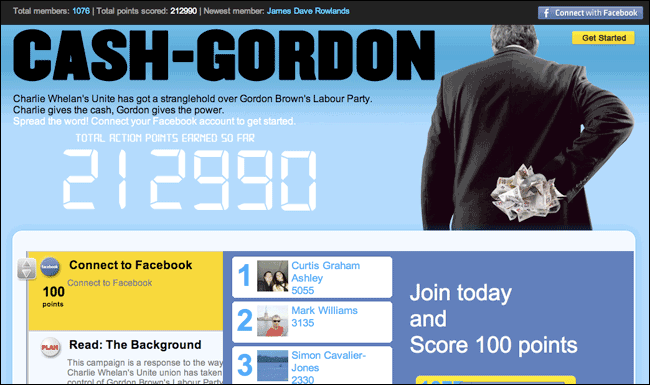

A few things stood out. From the political parties, the Conservatives’ #cashgordon Twitter fiasco was amusing, but worrying. It seemed that the people who look likely to be commissioning the nation’s digital infrastructure in the next couple of years couldn’t commission a website which got basic security right. Worse, instead of holding their hands up to a “Web security 101” SNAFU, they tried to shift the blame to “left-wing” hackers, an example of tribal politics at its worst.

For their part, Labour’s decision to turn their homepage over to a Twitterstream during the Leaders’ Debates was a brave but, I believe, misguided one. First-time visitors would have been perplexed by it, just at a moment when the nation was focused on politics and the party had a chance to introduce floating voters to key elements of the Labour manifesto.

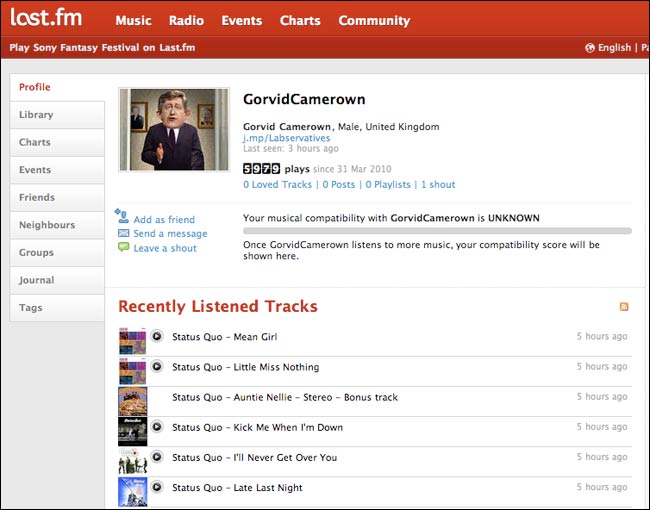

The Liberal Democrats’ Labservative campaign was a favourite of mine for sheer attention to detail. Fictional leader Gorvid Camerown even had a profile on Last.fm, where he enjoyed nothing but the status quo. The campaign was clever, but whether it increased the Liberal Democrat vote is impossible to judge.

It seemed to me that as the campaign progressed, the cycle of social media reaction got faster and faster. There were plenty of spoof political posters early in the campaign, but on the eve of the poll it seemed to take less than ten minutes for the first spoofs of The Sun’s Cameron-as-Obama front page to appear. Likewise, within minutes of the Landless Peasant Party candidate appearing behind Gordon Brown giving a clenched-fist salute in Kirkcaldy, a Facebook fan page for Deek Jackson had been set up and attracted over 500 joiners. It has now reached 4,000.

It has been a really interesting exercise. I definitely feel that compiling the digital election timeline personally kept me much more engaged in the campaign.

Would I do the timeline differently in the future? In retrospect I might have been better off putting the events into a mini-blog service like Tumblr, and powering the Dipity version from that. As it is, the data is locked into Dipity, and can’t be indexed by search or exported. I have, though, uploaded around 100 of the screengrabs and images I used to a Flickr set so that people can re-use them.
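
For anyone planning a similar project, one way round the lock-in is to keep a master copy of the events in a plain, portable format and treat any timeline tool as just one view of it. The sketch below is purely illustrative: the `TimelineEvent` class and `export_events` function are hypothetical names, not part of Dipity or Tumblr, and the fields simply mirror the ones described earlier (a title and timestamp, plus an optional description, image and link).

```python
import json
from dataclasses import dataclass, asdict
from datetime import datetime
from typing import Optional


@dataclass
class TimelineEvent:
    # Hypothetical record: a required title and timestamp,
    # with an optional description, image and link URL.
    title: str
    timestamp: datetime
    description: Optional[str] = None
    image: Optional[str] = None
    link: Optional[str] = None


def export_events(events):
    """Return the events as a JSON string, oldest first."""
    ordered = sorted(events, key=lambda e: e.timestamp)
    records = []
    for event in ordered:
        record = asdict(event)
        # datetime objects are not JSON-serialisable, so store ISO 8601 text.
        record["timestamp"] = event.timestamp.isoformat()
        records.append(record)
    return json.dumps(records, indent=2)


if __name__ == "__main__":
    # Placeholder events; the titles, dates and URL are made up for illustration.
    sample = [
        TimelineEvent("Example event B", datetime(2010, 5, 6, 22, 0)),
        TimelineEvent("Example event A", datetime(2010, 4, 15, 20, 30),
                      link="https://example.com/a"),
    ]
    print(export_events(sample))
```

A file like this can be indexed, backed up, or fed into whichever timeline service is fashionable next time round.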