If there were one key word in open data it would be “re-use”, according to Open Knowledge Foundation community coordinator Jonathan Gray.

Speaking on an open data panel at the International Journalism Festival, Gray said the freedom to re-use open government data is what makes it distinct from the government information that has been available online for years but locked up under an all-rights-reserved license or a confusing mixture of different terms and conditions.

Properly open data, Gray said, is “free for anyone to re-use or redistribute for any purpose”.

The important thing about open data is moving from a situation of legal uncertainty to legal clarity.

And he sketched out in his presentation what the word “open” should mean in this context:

Open = use, re-use, redistribution, commercial re-use, derivative works.

The Open Knowledge Foundation promotes open data, but most important of all, Gray said, is finding beneficial ways to apply that data.

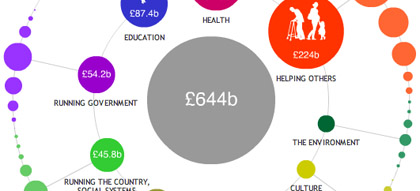

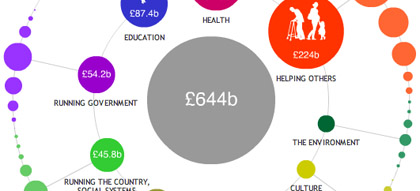

Perhaps the signal example from the foundation itself is Where Does My Money Go, which analyses data about UK public spending.

Open Knowledge Foundation projects like Where Does My Money Go are about “giving people literacy with public information”, Gray said.

Nothing will replace years of working with this information day in and day out, and harnessing external expertise is essential. But the key is allowing a lot more people to understand complex information quickly.

Along with its visualisation and analysis projects, the foundation has established opendefinition.org, which provides criteria for openness in relation to data, content, and software services, and opendatasearch.org, which is aggregating open data sets from around the world. See a full list of OKF projects at this link.

“Tools so good that they are invisible”

This is what the open data movement needs, Gray said, “tools that are so good that they are invisible”.

Before the panel he suggested the example of some of the Google tools that millions use every day: simple, effective, open tools that we turn to without thinking, that are “so good we don’t even know that they are there”.

Along with Guardian data editor Simon Rogers, Gray was leaving Perugia for Rome to take part in a meeting with senior Italian politicians about taking the open data movement forward in Italy. He had been in France the week before, talking to people about an upcoming open data portal – “there is a lot of top level enthusiasm for it there”.

In an introduction to the session, Ernesto Belisario, president of the Italian Association for Open Government, revealed that enthusiasm for open data is not restricted to larger, more developed countries.

Georgia has established its own open data portal, opendata.ge, and, according to Belisario, took out an advert to promote the country’s increasing transparency ranking.

Some portals are expensive: the US, which began open government data publishing with data.gov, spends £34 million a year maintaining the various open data sites.

Others are cheap by comparison, with the UK’s opendata.gov.uk reportedly costing £250,000 to set up.

Some countries will pioneer with open data, some will bitterly resist. But with groups like the Open Knowledge Foundation busy flying representatives around the world to discuss it, that movement “from legal uncertainty to legal clarity” seems likely to go from strength to strength.

See Gray’s full presentation at this link.

See more from #ijf11 on the Journalism.co.uk Editor’s Blog.