The excellent Nicola Hughes, author of the Data Miner UK blog, has published a practical post on how she scraped and cleaned up some very messy Cabinet Office spending data.

Firstly, I scraped this page to pull out all the CSV files and put all the data in the ScraperWiki datastore. The scraper can be found here.
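To give a flavour of that first step, here is a minimal sketch in Python of pulling CSV links out of a page. It is not Hughes's scraper: the sample HTML, the `example.org` address and the names `CsvLinkParser` and `find_csv_links` are all assumptions made up for illustration, and a real scraper would also need to download each file and store the rows.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin


class CsvLinkParser(HTMLParser):
    """Collects the targets of <a> tags whose href ends in .csv."""

    def __init__(self, base_url):
        super().__init__()
        self.base_url = base_url
        self.csv_links = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        href = dict(attrs).get("href")
        # Ignore any query string and compare case-insensitively.
        if href and href.lower().split("?")[0].endswith(".csv"):
            # Turn relative links into absolute URLs.
            self.csv_links.append(urljoin(self.base_url, href))


def find_csv_links(html, base_url):
    parser = CsvLinkParser(base_url)
    parser.feed(html)
    return parser.csv_links


# Hypothetical page content standing in for the real spending page.
SAMPLE_HTML = """
<ul>
  <li><a href="/files/spend-april.csv">April</a></li>
  <li><a href="/files/spend-may.CSV">May</a></li>
  <li><a href="/about.html">About</a></li>
</ul>
"""

links = find_csv_links(SAMPLE_HTML, "https://example.org/spending")
for link in links:
    print(link)
```

Only the two CSV links are kept; the "About" link is skipped because its address does not end in `.csv`.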

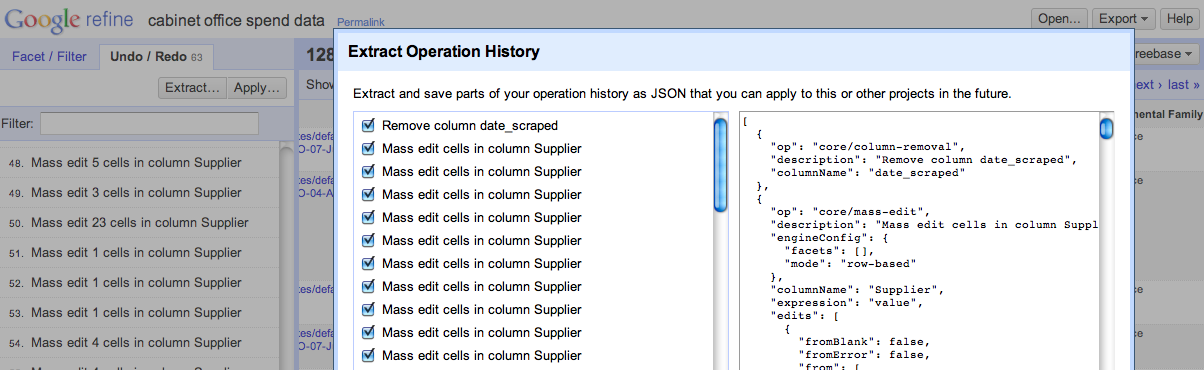

It has over 1,200 lines of code but don’t worry, I did very little of the work myself! Spending data is very messy with trailing spaces, inconsistent capitals and various phenotypes. So I scraped the raw data which you can find in the “swdata” tab. I downloaded this and plugged it into Google Refine.
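Hughes did her cleaning in Google Refine, but the kind of problem she describes (trailing spaces and inconsistent capitals) can be sketched in a few lines of Python. The supplier names and amounts below are invented for illustration, and `clean_cell` and `match_key` are hypothetical helper names, not part of any tool mentioned in her post.

```python
import csv
import io
from collections import defaultdict


def clean_cell(value):
    """Strip leading/trailing spaces and collapse runs of whitespace."""
    return " ".join(value.split())


def match_key(value):
    """Build a case-insensitive key so spelling variants group together."""
    return clean_cell(value).casefold()


# Invented sample rows showing the sort of mess found in spending data.
RAW = (
    "Supplier,Amount\n"
    "  Acme Ltd ,100\n"
    "ACME LTD,250\n"
    "acme  ltd,75\n"
    "Widget Co,40\n"
)

rows = list(csv.DictReader(io.StringIO(RAW)))

# Add up spending per supplier, treating the variants as one supplier.
totals = defaultdict(float)
for row in rows:
    totals[match_key(row["Supplier"])] += float(row["Amount"])

for supplier, total in totals.items():
    print(supplier, total)
```

Without the cleaning step, the three spellings of the same supplier would be counted as three separate suppliers, which is exactly the kind of error that makes raw spending data misleading.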

And so on. Hughes has held off on describing “something interesting” that she has already found, focusing instead on the technical aspects of the process, but she has published her results for others to dig into.

Before I can advocate using, developing and refining the tools needed for data journalism I need journalists (and anyone interested) to actually look at data. So before I say anything of what I’ve found, here are my materials plus the process I used to get them. Just let me know what you find and please publish it!

See the full post on Data Miner UK at this link.

Nicola will be speaking at Journalism.co.uk’s news:rewired conference next week, where data journalism experts will cover sourcing, scraping and cleaning data along with developing it into a story.