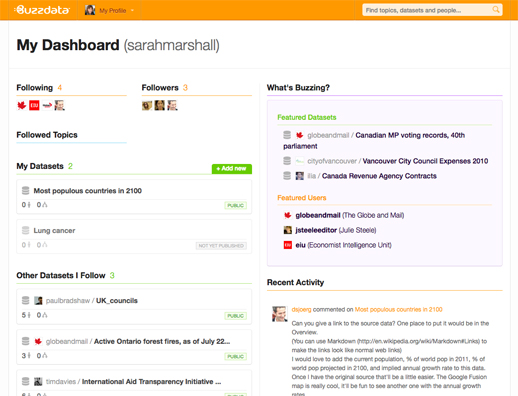

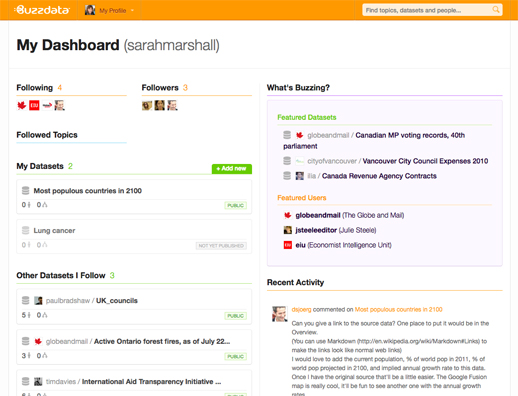

Data gets its own social network today (2 August), with the launch of BuzzData, which its CEO describes as “a cross between Wikipedia for data and Flickr for data”.

BuzzData is due to launch in public beta later today, when Canada, where the start-up is based, wakes up.

It launched in private beta last week to allow a few of us to test it out.

What is BuzzData?

BuzzData is a “social network for people who work with data”, CEO Mark Opausky told Journalism.co.uk.

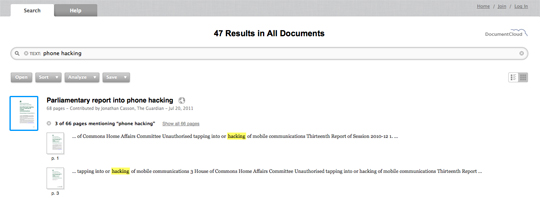

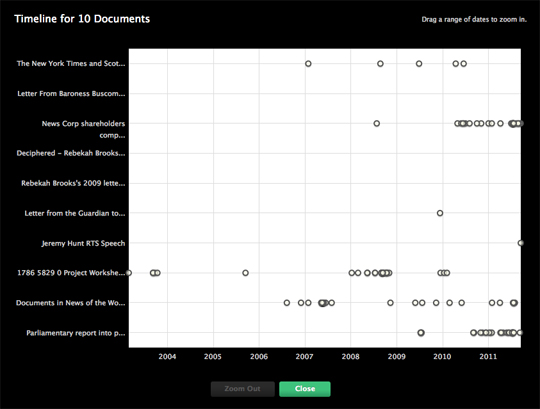

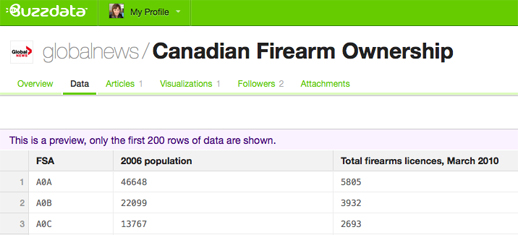

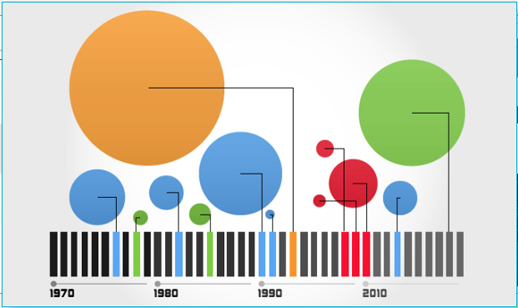

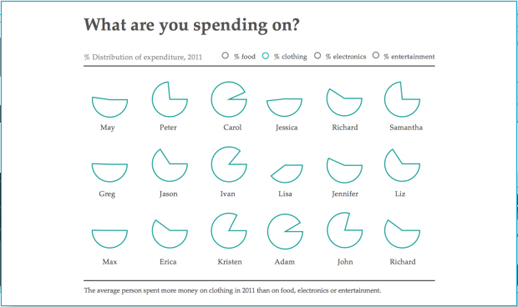

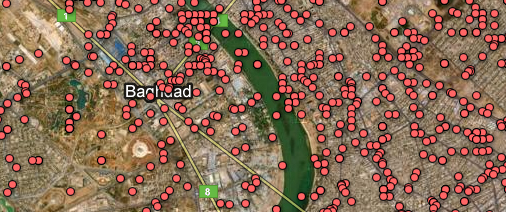

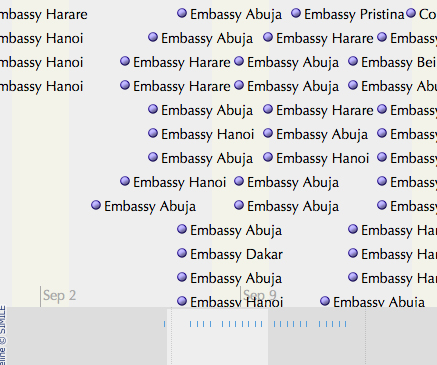

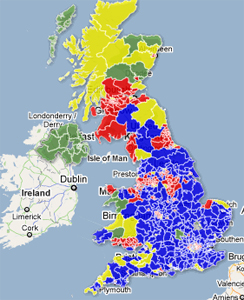

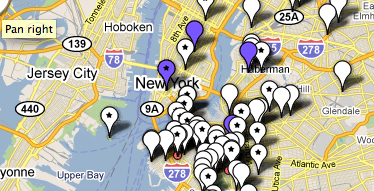

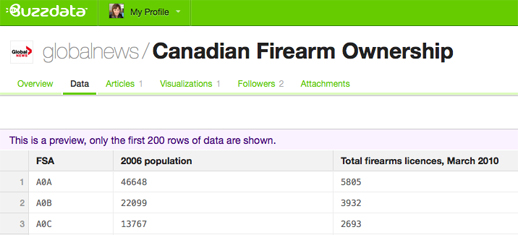

Users can upload data, data visualisations, articles and any background documentation on a topic or story. Other BuzzData users can then follow your data, comment on it, download it and clone it.

Members of the Toronto-based team hope the platform will be a space where data journalists come together with researchers and policy makers in order to innovate.

They have thought about who could potentially use the social network and believe there are around 15 million people who deal with statistics – whether that data concerns sport, climate change or social inequalities – and who are “interested in seeing the data and the conversation that goes on around certain pieces of data”, Opausky said.

“We are a specialised facility for people who wish to exchange data with each other, share data, talk about it, converse on it, clone it, change it, merge it and mash it up with other data to see what kind of innovative things may happen.”

BuzzData does not allow you to create data visualisations or upload them in a way that makes beautiful graphics immediately visible. That is what the recently launched tool Visual.ly does.

How is BuzzData of use to journalists?

BuzzData allows you to share data either publicly or within a closed network.

Indeed, a data reporter from Telegraph.co.uk has requested access to see if BuzzData could work for the newspaper as a data-publishing platform, according to a member of BuzzData’s team.

Opausky explained that journalists can work by “participating in a data conversation and by initiating one” and gave an example of how journalism can be developed through the sharing of data.

“It allows the story to live on and in some cases spin out other more interesting stories. The journalists themselves never know where this data is going to go and what someone on the other side of the world might do with it.”

Why does data need a social network?

Asked what sparked the idea of BuzzData, which has secured more than $1 million in funding from angel investors, Opausky explained that it grew out of the need for such a tool felt by Peter Forde, the company’s chief technology officer.

“He had spent many years studying the data problem and he was frustrated that there wasn’t some open platform where people could work together and share this stuff and he had a nagging suspicion that there was a lot of innovation not happening because information was siloed.

“Going deeper than that, we recognised that data itself isn’t particularly useful until you can put it into context, until you can wrap it around a topic or apply it to an issue or give it a cause. And then even when you have context the best, at that point, you have is information and it doesn’t become knowledge until you add people to it. So his big idea was let’s take data, let’s add context and let’s help wrap communities of people round this thing and that’s where innovation happens.”

You can sign up for BuzzData at this link. Let us know what you think by leaving a comment below.