The University of Central Lancashire’s Journalist Leaders Programme has secured €75,000 (£64,000) of Google funding to support “news entrepreneurs” after being named as one of three winners of the International Press Institute’s News Innovation Contest.

The programme, founded by researcher, academic and consultant on newsroom and digital business innovation François Nel (pictured), will develop a project called Media and Digital Enterprise (MADE), to offer an “innovative training, mentoring and research programme”.

The funding awarded by IPI will be spent by the UCLan programme on working “to create sustainable news enterprises – whether for social or commercial purposes – by helping innovators”.

Nel told Journalism.co.uk MADE will “support the entire news ecosystem as we need innovation across the sector”.

He is now looking for people with entrepreneurial ideas who are interested in news innovation.

The other two winners of the contest are Internews Europe, a European non-profit organisation created in 1995 to help developing countries establish and strengthen independent media organisations to support freedom of expression and freedom of access to information, alongside the World Wide Web Foundation, a Swiss public charity founded by Sir Tim Berners-Lee, the inventor of the world wide web.

In February Google announced it was awarding $2.7 million to the Vienna-based IPI for its contest.

There were around 300 applicants, reduced first to 74 and then to 26 before the three winners were selected by a panel of seven judges, including journalism professor and commentator Jeff Jarvis.

The winners of the total fund of $600,000 were announced yesterday; Nel heard this morning how much the MADE project is being allocated, telling Journalism.co.uk “it’s fantastic to have support for news innovations”.

Nel and others on the Leaders Programme have been working with news organisations, including Johnston Press, Trinity Mirror and the Guardian Media Group, looking at digital processes and innovative business models.

MADE allows us to pull those strands together and work directly with news entrepreneurs. And we’re really excited about the possibility of putting this to the test.

Nel explained that MADE will “deliver good skills for a whole range of news start-ups” and he is now “looking to work with individuals, groups and companies, who are interested in news innovation” to get involved.

The project will help develop new skills and test the business plans, offering bespoke support to those with entrepreneurial ideas.

We’re looking to support five good people and good ideas for at least three months so that we can give those ideas legs.

The project includes various partners that were part of the bid, including one to build content and one to build communities.

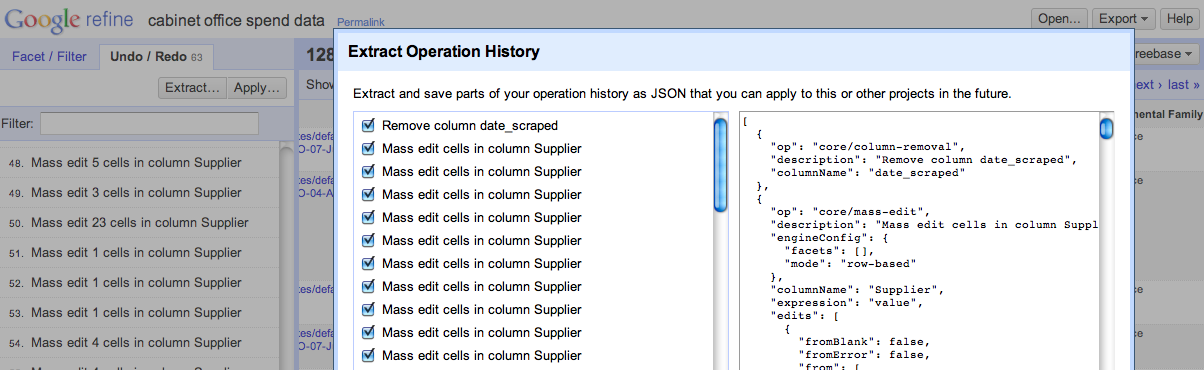

Developers at ScraperWiki will be working with the project to develop innovations in data journalism and build content. Another partner is Sarah Hartley, who is now working on the Guardian’s social, local, mobile project n0tice, with this area of the project focusing on building communities.

MADE will also involve Nel’s colleagues at Northern Lights, an award-winning business incubation space at UCLan.

The project also has an international element, involving groups in Turkey, drawing on Nel’s connections in the country.

Nel explained why the funding and ongoing support from IPI is vital.

In the digital news media space the cyber world is littered with start-ups. The corpses of news start-ups are everywhere. What we really need to do is help news entrepreneurs stay up and that’s what we are trying to do here.