The main difficulty for data journalists in the UK is gaining access to meaningful data, Heather Brooke said in her keynote speech at news:rewired – noise to signal.

Brooke, a journalist, author and freedom-of-information campaigner, is best known for her role in bringing MPs’ expenses to light and went on to work with the Guardian on the WikiLeaks cables. She contrasted the difficulty of accessing data in the UK with her experience in the US, where she trained and worked as a political journalist and a crime reporter.

Brooke explained that when working in the US she was “heavily reliant on public records” and said the “underpinning of my journalism was state records”. As a crime reporter she used a police scanner, likening it to those that viewers of the US series ‘The Wire’ will be familiar with.

“As a journalist I would decide what the story was,” she said, based on the data from public records. She was able to note patterns in the incident reports and spot a spate of domestic violence, for example.

Brooke told of how many UK police forces limit the release of their data to media messages left on a voice bank.

Public bodies in the UK “control the data, they control the public perception of the story,” she said.

“How do any journalists in the UK do their job?” she asked. It was that problematic question that led her to become an FOI campaigner.

When she asked for receipts for politicians’ expense claims in the US, she had them within a couple of days.

It was a different story in the UK. It took her five years and several court cases, including one taken to the High Court, which led to the release of second home allowance data for 10 MPs.

The House of Commons, “sticking their feet on the ground”, refused to release further data, which had been scanned in by the fees office.

A CD of the data was touted round Fleet Street and sold for £110,000.

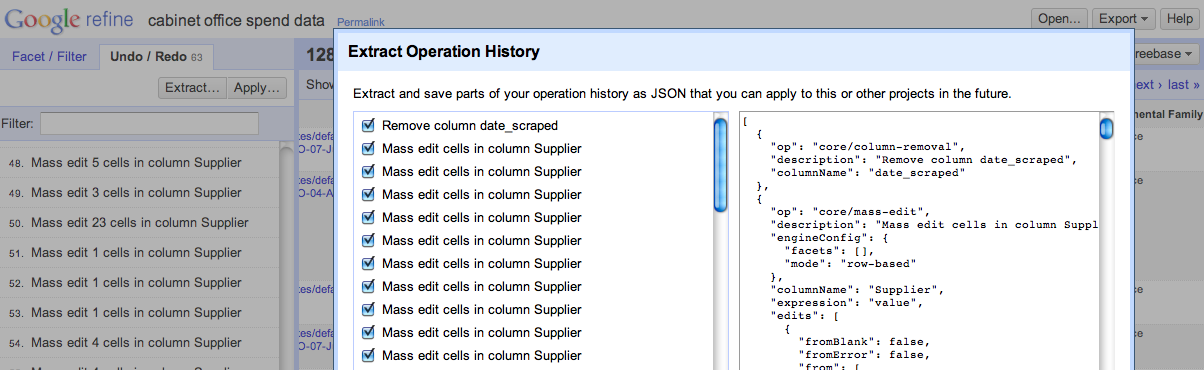

The Telegraph, rather than Brooke, then had the data and had to verify and cross-check it.

What is our purpose as journalists in the digital age?

Brooke’s answer to that question is that “we need to change an unhelpful attitude” of public records being withheld.

“The information exists as if they own it”, she said.

“They don’t want negative information to come out” and they want to try and manage their reputation, she said in what she described as “the take over of public relations”.

“We need to be campaigning for these sets of data,” she said, giving the examples of courts and the release of files.

“We make the FOI request and that should open the whole tranche of data so any other journalist can go back and use it for their reporting.”

She said data journalism is “not just about learning how to use Excel spreadsheets but you have to have something to put in those spreadsheets”.

Brooke made a “rallying cry” as to why professional journalists, particularly those who practise investigative journalism, are vital.

The “one unique selling point, why people would come to a professional news organisation” is the training and experience journalists have in “sifting through for what is important and what is true”.

Brooke said that as people have more and more information, a journalist’s role is to distil and signpost that information.

The second key point she made is that journalists must establish “what is true”.

When a politician claims that crime has gone down, a journalist must be able to verify it and “test the truthfulness” of it, she said.

She explained that journalists need to know how that data was collected and, ideally, have access to the data itself.

Brooke told how she tried to pitch stories on MPs’ expenses on an almost daily basis before they came to light. She said editors thought it was a non-story, “almost took the word of parliament” and had the perception that the public was not interested. But they were.

“It’s a symptom of the public not having meaningful information and are not able to take action. That’s our role as professional journalists.”

This article is a cross post. It was originally published on news:rewired.